Duration

Sept 2024 - Dec 2024

My Role

Extensive UX Research, UX Writing, Feedback-Driven Design Iteration, Design Handoff to Developers

Team

2 UX Designers, 2 Product Managers

Currently in Development

The project is actively being developed, with key features being refined.

Potential Increase in Adoption Rate

We project a 40% increase in adoption rate, driven by usability improvements.

The product is a company-wide, LLM-based chat system that allows employees to interact with AI for a wide range of use cases.

It answers company-specific questions, assists with documentation, synthesizes reports, and more. To make these interactions more efficient, it also supports the creation of persistent, task-specific assistants. These assistants help employees maintain continuity in their AI workflows.

Users were stuck in a loop: re-uploading documents, retyping context, and repeating instructions every time they interacted with the AI.

To tackle these issues, we followed a structured design process over the course of 3 months.

We set out to design a buildable assistant framework: a way for users to…

♻️

Create Once, Re-use Always

Create assistants once and reuse them continuously.

🎚️

Choose your Path

Choose between conversational or form-based creation.

🧪

Test & Refine

✅

We believed a dual-path creation model (guided vs. manual) with persistent memory would unlock long-term value and deepen user trust in AI.

To understand expectations better and shape our direction, we:

Conducted moderated usability tests with the existing builder

Benchmarked tools like ChatGPT, ClaudeAI, Perplexity, Cohere, Postgres, and HuggingChat

From sessions with 10+ users across 5+ departments, along with the rest of our research, we identified the following themes:

🎯

🤖

“Bot as builder” was a promising pattern, but often unsupported.

💬

Form experiences worked better when labels felt like questions.

Following our research and co-design workshops, we generated a wide range of ideas that we then needed to prioritize strategically.

To move forward, we needed to identify what to build first. We conducted a 3-axis prioritization exercise, scoring each idea based on:

User Impact: How much value it would bring to employees.

Design Effort: Time and complexity to prototype and test.

Development Effort: Engineering cost and feasibility.

This helped us map ideas into quick wins vs. long-term investments.

Idea Prioritization Based on User Impact, Design Effort, and Dev Effort Scores

Translating Insights into an Intuitive Assistant-Building Experience

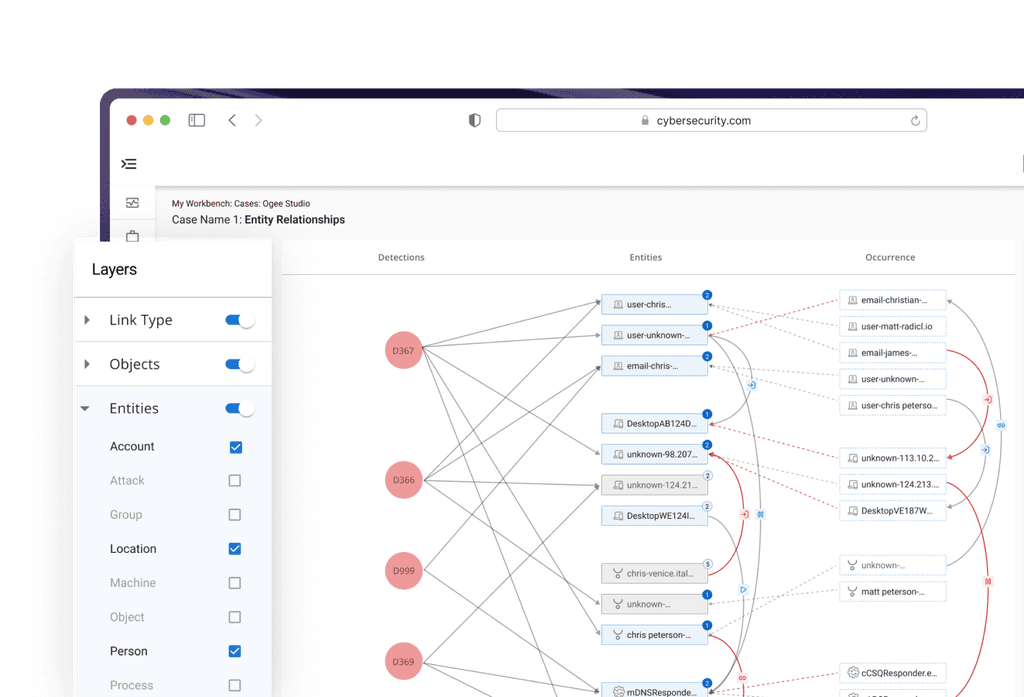

Flowchart for Creating an Assistant

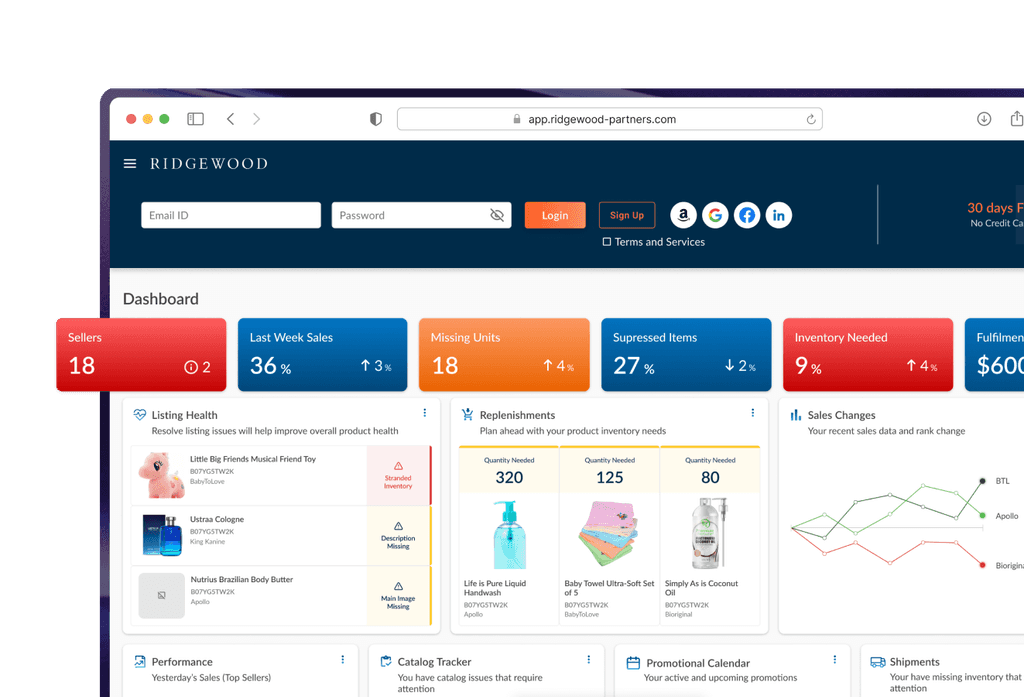

Final Design for Creating an Assistant

Our goal was to reduce friction and cognitive load without sacrificing flexibility. To get there, we made several key decisions aimed at delivering clarity at every step.

While we aimed to restructure the assistant flow for long-term scalability, we received pushback from the business.

We were working toward reorganizing the information architecture (IA) and making room for advanced capabilities, but the sprint was focused on shipping refinements for immediate rollout.

As a result, we had to hold off on foundational improvements we felt were critical for scale. Still, we optimized what we could, ensuring the experience remained clear, flexible, and ready for future evolution.

I would have loved to explore more intelligent, proactive capabilities that go beyond static assistant creation:

Suggesting assistant creation based on frequently repeated queries, offering help before users even ask.

Moving toward an Agentic Assistant model, where assistants can pull in context and respond proactively.

How is this project helping me grow as a designer?

01

Prompt Design Is UX Too

02

Users Need Trust Cues